Artificial intelligence may appear complex, but at its core, it relies on surprisingly simple building blocks. These building blocks are called tokens, and they shape how language models read, process, and generate meaning. To truly understand how AI thinks, it’s essential to explore how tokens function within modern AI systems.

What Are Tokens in AI?

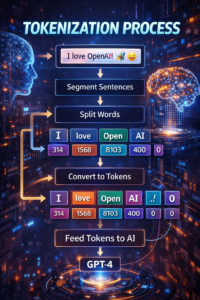

Tokens are small pieces of information (often parts of words, full words, or even punctuation marks) that AI models use to interpret language. Instead of processing sentences all at once, models like GPT break text into tokens to make patterns easier to analyze.

For example, “thinking” might be divided into “think” and “ing.” This method helps AI detect relationships across languages, grammar, and context. Tokens act as the foundation of everything an AI system understands, no matter how simple or advanced the task may be.
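The splitting described above can be sketched with a toy tokenizer. This is only an illustration: the vocabulary below is hypothetical, and the greedy longest-match rule stands in for the learned subword methods that production models use.

```python
# Toy vocabulary: hypothetical, chosen only to illustrate subword splitting.
TOY_VOCAB = {"think", "ing", "token", "s", "un", "break", "able"}


def tokenize_word(word, vocab=TOY_VOCAB):
    """Split a word into the longest vocabulary pieces, left to right.

    Characters that match no vocabulary entry become single-character tokens.
    """
    tokens = []
    i = 0
    while i < len(word):
        # Try the longest possible piece first, then shrink.
        for j in range(len(word), i, -1):
            piece = word[i:j]
            if piece in vocab:
                tokens.append(piece)
                i = j
                break
        else:
            # No vocabulary entry starts here: fall back to one character.
            tokens.append(word[i])
            i += 1
    return tokens


print(tokenize_word("thinking"))     # ['think', 'ing']
print(tokenize_word("unbreakable"))  # ['un', 'break', 'able']
print(tokenize_word("tokens"))       # ['token', 's']
```

Real tokenizers learn their vocabulary from large amounts of text, so the exact pieces a given model produces for a word may differ from this sketch.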

How Tokens Shape AI Reasoning

To understand how tokens work, imagine an AI model reading text one token at a time. Each token is converted into a numerical vector that represents its meaning. The model then analyzes relationships between these vectors to predict what comes next.
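A heavily simplified sketch of that pipeline is shown below. Everything in it is an assumption made for illustration: the four-word vocabulary, the made-up two-dimensional vectors, and the scoring rule (averaging the context vectors and taking dot products). Real language models use learned vectors with far more dimensions and many neural network layers in between.

```python
import math

# Hypothetical toy vocabulary with made-up 2-dimensional vectors.
VOCAB = ["the", "cat", "sat", "mat"]
EMBEDDINGS = {
    "the": [0.1, 0.3],
    "cat": [0.9, 0.2],
    "sat": [0.4, 0.8],
    "mat": [0.7, 0.1],
}


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def next_token_probs(context):
    """Score every vocabulary token against the averaged context vector."""
    vectors = [EMBEDDINGS[token] for token in context]
    dim = len(vectors[0])
    # Average the context vectors into a single summary vector.
    avg = [sum(v[d] for v in vectors) / len(vectors) for d in range(dim)]
    # Dot product between the summary vector and each candidate token.
    scores = [sum(a * b for a, b in zip(avg, EMBEDDINGS[t])) for t in VOCAB]
    return dict(zip(VOCAB, softmax(scores)))


probs = next_token_probs(["the", "cat"])
print(probs)
```

Because the vectors are invented, the resulting probabilities carry no linguistic meaning. The point is only the flow: tokens become vectors, vectors become scores, and scores become a probability for each possible next token.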

Tokens help the model:

- Recognize context
- Understand user intent
- Predict likely responses
- Maintain structure in sentences

This structured approach is what allows AI to hold conversations, translate languages, summarize long texts, and generate creative content.

Why Tokens Are Critical for AI Performance

Every AI system is designed around token limits, computational costs, and processing efficiency. This is why tokens matter: the number of tokens determines how much information an AI can handle at once.

Tokens matter for several reasons:

- Accuracy: More tokens allow deeper context understanding.
- Cost: Most AI services charge based on token usage.
- Performance: Large token counts require more computing resources.
- Memory: Tokens define how much the AI can “remember” in a conversation.

Tokens, in simple terms, act as both the currency and the language that AI models use to understand human input.
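The cost and memory points above come down to simple arithmetic. The sketch below assumes a service that bills input and output tokens separately per 1,000 tokens. The prices and the context window size are placeholders, not the rates or limits of any real provider.

```python
def estimate_cost(input_tokens, output_tokens, price_in_per_1k, price_out_per_1k):
    """Estimate the cost of one request from its token counts.

    Prices are per 1,000 tokens and are supplied by the caller.
    """
    input_cost = input_tokens / 1000 * price_in_per_1k
    output_cost = output_tokens / 1000 * price_out_per_1k
    return input_cost + output_cost


def remaining_context(context_window, input_tokens):
    """Return how many tokens are left for the reply, never below zero."""
    return max(context_window - input_tokens, 0)


# Placeholder numbers for illustration only.
cost = estimate_cost(1500, 500, price_in_per_1k=0.50, price_out_per_1k=1.50)
print(cost)                           # 1.5
print(remaining_context(8000, 1500))  # 6500
```

The same two calculations explain why long prompts are more expensive and why a long conversation eventually leaves less room for the model's answer.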

Real-World Examples of Token Use

Here are practical scenarios where tokens play a crucial role:

- Customer support AI: Interprets messages token by token for accurate replies.
- Chat platforms: Use tokens to maintain conversation flow.
- Search engines: Break queries into tokens for relevance detection.
- Content creation tools: Depend on tokens to structure writing and tone.

These applications show how deeply tokens influence AI reasoning and output.

Challenges Associated with Tokens

Although tokens are powerful, they also expose some limitations in the way AI processes language.

The main challenges include:

- Ambiguity: Some words hold many meanings, making token interpretation tricky.
- Tokenization differences: Not all languages tokenize equally well.
- Cost scaling: More tokens mean higher computational expense.
- Context window limits: AI models can only process a specific number of tokens at a time.
- Bias risks: Tokens from biased data may influence model output.

These challenges explain why ongoing research continues to refine how AI tokenization works.
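One common way to cope with context window limits is to drop the oldest parts of a conversation so the rest fits. The sketch below shows that idea under two stated assumptions: whitespace-separated words stand in for real tokens, and the `trim_history` helper is a hypothetical name, not part of any library.

```python
def count_tokens(text):
    # Assumption: whitespace-separated words stand in for real tokens.
    return len(text.split())


def trim_history(messages, max_tokens):
    """Keep the most recent messages whose combined token count fits the budget."""
    kept = []
    used = 0
    # Walk from newest to oldest and stop at the first message that does not fit.
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # Restore chronological order.
    return kept


history = [
    "Hello, I need help with my order",  # 7 tokens
    "Sure, what is the order number",    # 6 tokens
    "It is 12345",                       # 3 tokens
]
print(trim_history(history, max_tokens=10))
# ['Sure, what is the order number', 'It is 12345']
```

With a budget of 10 tokens, the oldest message is dropped, which is one reason an assistant can appear to "forget" the start of a long conversation.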

The Future of Tokenization in AI

As models grow more advanced, tokenization methods will continue evolving. Future systems may use:

- More efficient subword tokenization
- Improved multilingual token understanding
- Compression algorithms to reduce token count
- Tokenless models that rely on continuous representations

Each of these improvements will bring AI closer to understanding language like humans do.

Conclusion

Tokens may seem small, but they are central to how AI interprets and generates language. By breaking text into meaningful pieces, tokens help AI understand context, intention, and structure. They influence cost, performance, accuracy, and overall intelligence. As AI expands into more industries, token optimization will remain a crucial part of developing smarter, faster systems.

FAQs

Q1. How do tokens work in AI?

Tokens are small pieces of text—like parts of words, full words, or symbols—that AI breaks your message into. The model converts each token into numbers, analyzes patterns, and then predicts the next token. This step-by-step process is how AI reads, understands, and responds to you.

Q2. How does AI actually think?

AI doesn’t think like humans. It doesn’t feel or reason emotionally. Instead, it calculates patterns from data it learned during training. When you type something, it applies those learned patterns to your tokens and predicts the most likely response, one token at a time. Its “thinking” is mathematical, not conscious.

Q3. How is a token different from a word in AI?

A token isn’t always a full word. Some words are split into multiple tokens, while tiny words or punctuation might be single tokens. Tokens are simply the units AI uses to process language, while words are natural language units humans use. Tokens help AI understand text more efficiently.
